Optimization of web pages?

An article in Ny Teknik in Sweden discusses search engine optimization. It has some good ideas, but of course the most important thing is that articles discuss the phenomenon at all. Are the organisations that optimize pages in combat with Google? Or are they only fighting each other?

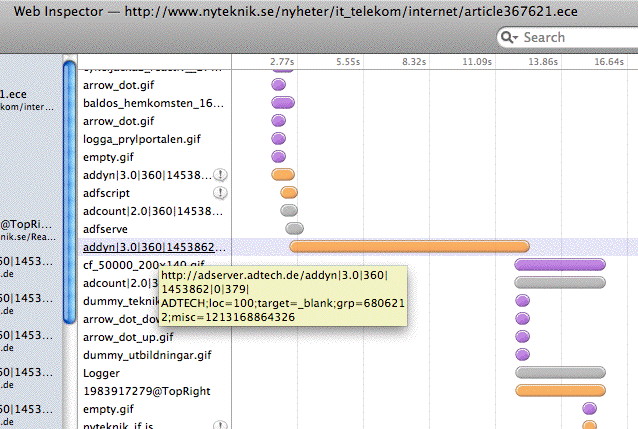

What I would also like Ny Teknik to look more closely at are other ways of optimizing: optimizing the pages themselves, for example by looking at their own web page. Just look at how many different domain names and servers the content is stored on. Each DNS lookup takes time, and each new TCP connection for HTTP takes time. And what is worse, Ny Teknik cannot itself control the experience of the end user, especially when external sites are in such bad shape. Have a look at the diagram below and see how slow one specific server is from which Ny Teknik incorporates content.
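
For anyone who wants to check a page of their own, here is a rough Python sketch of the two costs mentioned above. It counts the distinct hostnames a page pulls content from, and it times the DNS lookup and the TCP connect for a single host. The names `HostCollector`, `external_hosts` and `time_host` are made up for this illustration, and plain port 80 is an assumption. It only looks at `src` attributes and `<link href>`, so it will miss anything loaded by scripts.

```python
import socket
import time
from html.parser import HTMLParser
from urllib.parse import urlparse


class HostCollector(HTMLParser):
    """Collect hostnames of resources a page embeds (src, and href on <link>)."""

    def __init__(self):
        super().__init__()
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if not value:
                continue
            # Anchor hrefs are navigation, not fetched content, so skip them.
            if name == "src" or (name == "href" and tag == "link"):
                host = urlparse(value).hostname
                if host:  # relative URLs have no hostname
                    self.hosts.add(host)


def external_hosts(html):
    """Return the set of distinct hostnames referenced by the given HTML."""
    parser = HostCollector()
    parser.feed(html)
    return parser.hosts


def time_host(host, port=80, timeout=5.0):
    """Return (dns_seconds, connect_seconds) for one host. Needs network access."""
    start = time.perf_counter()
    info = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0]
    family, socktype, proto, _, addr = info
    dns_done = time.perf_counter()
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        sock.connect(addr)
    connected = time.perf_counter()
    return dns_done - start, connected - dns_done
```

Feed `external_hosts` the HTML of a page, then call `time_host` on each name it returns. The numbers depend on your resolver cache and your network, so run it a few times before drawing conclusions.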